An AI engineer’s tips for writing better AI prompts

My four tips for crafting effective prompts, especially when you're using AI for more than just simple answers.

Solomon Fung

AI Engineer at Coda

1. Know that more is more (details, that is).

The more details and context you include in your initial prompt, the better the outcome will be, especially when asking AI to generate content. Large language models (LLMs) are designed to please by following instructions, so the more information you give them, the more they will try to weave it into their answers. For example, let’s say we’re asking AI to help us write a happy birthday email for our teammate, Jane. A basic prompt would be something like “Help me write an email to Jane to say happy birthday.” With this prompt, you’ll get a message that is good enough to customize further, but you might find it a little bland and robotic. The tone may also not be what you were hoping for: sometimes the sentiments can seem trite or even sarcastic. Adding details such as your relationship to Jane, the tone you want, and the length of the message gives the AI more to work with.
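As a rough illustration of the difference between a basic and a detailed prompt, here is a minimal Python sketch. The helper `build_prompt` and the specific details (tone, length) are hypothetical and only stand in for whatever context you actually have about Jane.

```python
def build_prompt(task: str, details: dict[str, str]) -> str:
    """Append labeled context lines to a base task so the model has more to work with."""
    lines = [task]
    for label, value in details.items():
        lines.append(f"- {label}: {value}")
    return "\n".join(lines)


TASK = "Help me write an email to Jane to say happy birthday."

# The basic prompt from the text: no extra context at all.
basic = build_prompt(TASK, {})

# The same task with added context. These details are made up for illustration.
detailed = build_prompt(
    TASK,
    {
        "Relationship": "Jane is my teammate",
        "Tone": "warm and sincere, not sarcastic",
        "Length": "three or four sentences",
    },
)

print(detailed)
```

The point is only that every extra line is something the model can weave into its answer; the exact wording and labels are up to you.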

2. Ask your AI to adopt a persona.

Asking your AI to adopt a persona can help immensely in getting a response in the tone you’re hoping for. By narrowing your prompt to a persona or situation, you’re steering the AI toward patterns it has actually seen across its training data, so the resulting responses should be a closer fit for your scenario. You can easily try this out by asking for a response written as if by a pirate, or by asking the AI to imagine it is an overzealous IT manager messaging Jane before her trip.
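A persona can be added as a short instruction in front of the request. The sketch below assumes a hypothetical helper, `with_persona`, and made-up request wording; it is one simple way to phrase it, not a required format.

```python
def with_persona(persona: str, prompt: str) -> str:
    """Prefix a prompt with an instruction to answer as the given persona."""
    return f"Respond as if you were {persona}.\n\n{prompt}"


# The two personas mentioned in the text; the request wording is illustrative.
pirate_prompt = with_persona(
    "a pirate", "Help me write an email to Jane to say happy birthday."
)
it_prompt = with_persona(
    "an overzealous IT manager", "Write a message to Jane before her trip."
)

print(pirate_prompt)
print(it_prompt)
```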

3. Provide examples of what you want.

If you’re using AI for a repetitive data task, you likely need highly consistent results. In this case, specifying the desired format of the answer and including some realistic examples will guide the AI to generate better responses. For example, imagine you have a table of customer messages about your latest product release and want a quick view of the sentiment. You can use Coda’s AI columns to do this automatically by just writing, for example, “Find sentiment in @<email column>.” A better prompt would be something like “Analyze the sentiment in @<email column>. Return positive, neutral, or negative.” And an even better prompt would include examples, like this:

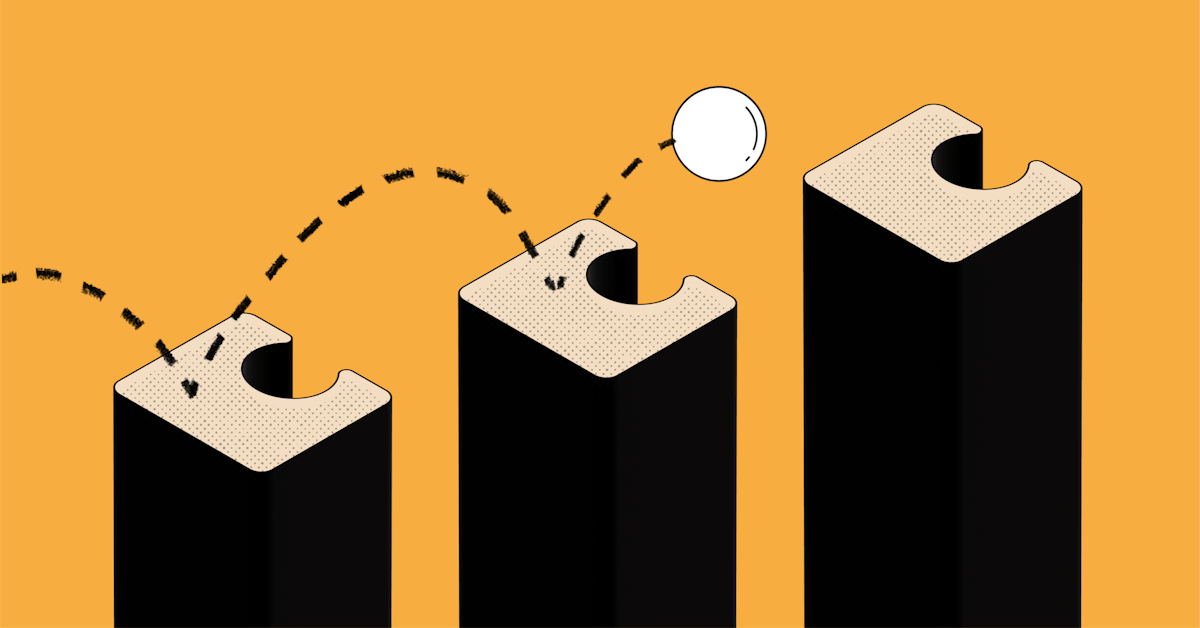
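Outside of Coda, the same idea (a fixed output format plus a few examples) can be sketched in plain Python. The example messages below are invented for illustration and are not the ones in the image, and `few_shot_prompt` and `parse_label` are hypothetical helper names.

```python
from typing import Optional

LABELS = ("positive", "neutral", "negative")

# Made-up example messages with the label we want for each.
EXAMPLES = [
    ("I love the new release, setup took two minutes.", "positive"),
    ("The update installed. I have not tried it yet.", "neutral"),
    ("The new version keeps crashing on launch.", "negative"),
]


def few_shot_prompt(message: str) -> str:
    """Build a prompt that states the allowed labels and shows labeled examples."""
    lines = [
        "Analyze the sentiment of the message. "
        "Return positive, neutral, or negative.",
        "",
        "Examples:",
    ]
    for text, label in EXAMPLES:
        lines.append(f'Message: "{text}"')
        lines.append(f"Sentiment: {label}")
    # End with the new message and an open label for the model to fill in.
    lines += ["", f'Message: "{message}"', "Sentiment:"]
    return "\n".join(lines)


def parse_label(response: str) -> Optional[str]:
    """Normalize a model reply to one of the allowed labels, or None if it is off-format."""
    cleaned = response.strip().strip(".").lower()
    return cleaned if cleaned in LABELS else None


prompt = few_shot_prompt("Shipping was slow but the product works.")
print(prompt)
```

Checking the reply against the allowed labels, as `parse_label` does, is a cheap way to catch the occasional off-format answer when you need consistent results across many rows.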

4. Break down complex tasks.

The things AI can do are pretty astounding, but no AI is perfect. When you’re using AI to perform more complex tasks, it can be helpful to break them down into steps to increase the accuracy of the responses. Let’s take writing a product requirements document (PRD) as an example, and imagine we’re pitching to build a new desktop app. Assuming you’re using an enterprise AI like Coda Brain that has access to your own data (not just the general internet), that might look something like this:

- You ask “Find previous explorations about a desktop app.” Coda Brain finds all previous writeups about a desktop app and summarizes them for you.

- You then ask Coda Brain to retrieve data to back up your pitch. For example, “Show me customer feedback about desktop apps,” and Coda Brain will bring back data from your connected tools. That might be win/loss data logged in Salesforce, or customer messages from Intercom.

- Next, you ask “Find a template for a PRD.” Coda Brain delivers your company’s usual PRD template (or a selection, if you have multiple).

- Finally, you ask Coda Brain to do a first draft of the PRD using the template and data it found, which you can then edit as needed.
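If you are scripting this kind of step-by-step flow yourself, it can be sketched as a loop that sends one request at a time and carries earlier answers forward as context. This is a generic illustration, not Coda Brain's API: `run_steps` is a hypothetical helper, and `fake_ask` is a placeholder for whatever function actually calls your AI tool.

```python
from typing import Callable

# The four steps from the list above, phrased as prompts.
STEPS = [
    "Find previous explorations about a desktop app.",
    "Show me customer feedback about desktop apps.",
    "Find a template for a PRD.",
    "Write a first draft of the PRD using the template and data found above.",
]


def run_steps(ask: Callable[[str], str], steps: list[str]) -> list[tuple[str, str]]:
    """Send each step in order, including earlier questions and answers as context."""
    history: list[tuple[str, str]] = []
    for step in steps:
        context = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in history)
        prompt = f"{context}\n\n{step}" if context else step
        history.append((step, ask(prompt)))
    return history


def fake_ask(prompt: str) -> str:
    """Placeholder for a real model call; it only reports the prompt size."""
    return f"[answer to a {len(prompt)}-character prompt]"


results = run_steps(fake_ask, STEPS)
for question, answer in results:
    print(question, "->", answer)
```

Keeping each step separate also gives you a chance to review and correct an intermediate answer before it feeds into the final draft.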